Artificial Intelligence: truth vs fiction

With any exciting new tech development come breathless predictions for what it can accomplish. But alongside those come sales pitches and deals that, frankly, are often based on overstatement and hype. Artificial Intelligence (or AI for short) has been no exception. And while the notion first emerged as far back as the mid-1950s, it wasn’t until this past decade that interest, particularly in the business applications of AI, truly went viral. In July of 2020, a group of researchers released AI Myths, a research-based website developed to help “disentangle and debunk” some of the myths, misconceptions and inaccuracies surrounding AI. Following is a brief overview of two of the site’s key points.

Two of the most common myths surrounding AI are that a) it’s objective and unbiased, and b) it can solve any problem.

Given the many studies that have demonstrated the levels of unconscious bias in human decision making, the idea that AI can overcome bias definitely has appeal. However, what this ignores is that in many instances the datasets that have been used to help the AI platform ‘learn’ may already be biased.

Indeed, there have been numerous publicised examples of AI bias: Amazon’s hiring system favouring men is one of the most notorious in recent years. A dataset with an encoded bias (like most resumes being from men) skews the perception of a good candidate. Similarly, a system trained on a childhood neglect dataset that only includes families that access state services might assume no child from a wealthy family could be neglected.
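
To make the point concrete, here is a minimal Python sketch of how a skewed history becomes a skewed “model”. It is a toy illustration only, not a description of Amazon’s or any other real system: the records, the group labels and the function names are all made up for the example.

```python
from collections import Counter

# Hypothetical historical hiring records: (group, was_hired).
# The history is dominated by one group, mirroring the kind of
# encoded bias described above. All numbers are invented.
history = (
    [("male", True)] * 80 + [("male", False)] * 20
    + [("female", True)] * 2 + [("female", False)] * 8
)

def learn_hire_rates(records):
    """'Train' by computing the historical hire rate for each group."""
    seen = Counter(group for group, _ in records)
    hired = Counter(group for group, was_hired in records if was_hired)
    return {group: hired[group] / seen[group] for group in seen}

def score(candidate_group, rates):
    """Score a new candidate using nothing but the learned group rate."""
    return rates[candidate_group]

rates = learn_hire_rates(history)
print(rates)
```

Nothing in this code is prejudiced in itself. It simply reproduces the pattern in its inputs, so a candidate from the under-represented group starts with a lower score before anything else about them is considered.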

Alternatively, a system might rely on a proxy measure that also skews the outcome. For example, YouTube wants to find the most interesting, high-quality content that it can. But it can’t measure quality directly, so it settles for surrogate measures of clicks and time spent on video. This can end up prioritising sensationalist content and conspiracy theories, simply because they get more clicks.
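
The gap between a proxy and the thing it stands in for can be sketched in a few lines of Python. The titles, quality scores and click counts below are invented for illustration, and the `quality` field is an assumption: in practice it is exactly the number a platform does not have.

```python
# Hypothetical videos. "quality" stands in for the thing we actually
# care about; "clicks" is the proxy that can be measured at scale.
videos = [
    {"title": "Careful documentary", "quality": 9, "clicks": 1_200},
    {"title": "Shocking conspiracy", "quality": 2, "clicks": 9_500},
    {"title": "Solid tutorial", "quality": 8, "clicks": 3_000},
]

def rank_by(items, key):
    """Return titles ordered from highest to lowest on the given field."""
    ordered = sorted(items, key=lambda video: video[key], reverse=True)
    return [video["title"] for video in ordered]

by_proxy = rank_by(videos, "clicks")     # what the system optimises
by_quality = rank_by(videos, "quality")  # what we wanted it to optimise

print("Ranked by clicks: ", by_proxy)
print("Ranked by quality:", by_quality)
```

With these made-up numbers the two rankings come out in opposite orders, which is the problem in miniature: the system is doing its job perfectly, just not the job anyone intended.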

The reality is that AI systems are still limited by the data inputs and algorithms they are programmed to abide by. So as we like to say in marketing, garbage in, garbage out.

The second myth is that AI can solve any problem. The fact is there are some tasks AI excels at, and others that are beyond its capability. The site takes a look at a research piece by a Princeton professor titled How to recognise AI snake oil, which looks at the limitations of machine learning-based AI by exploring three types of problems it is commonly used to solve.

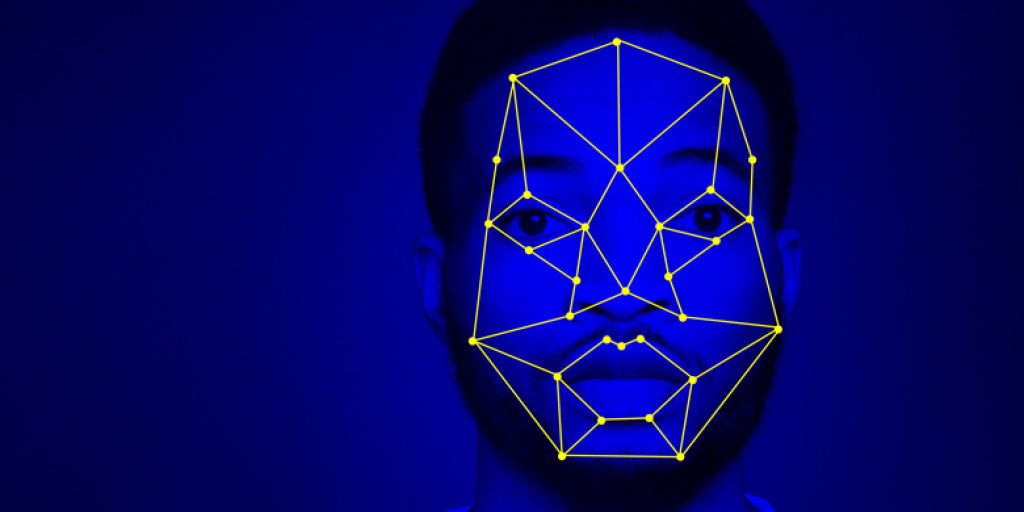

Firstly, perception problems, where there is a ground truth to measure predictions against. Transcribing speech to text is a good example of this, as are facial recognition tasks. With enough data and computing power, the idea that AI can solve any of these problems does hold some water. But data quality is also a key consideration, and the biases we touched on earlier can play a part in undermining AI’s accuracy in this area.

The second problem is automating judgement, where we get machine learning to make certain judgements by feeding it a sufficient number of examples. A spam filter is a good example of this. However, the system fails where there are polarising or contentious definitions of what it’s examining (identifying hate speech or fake news, for example). Where a wide spectrum of opinion exists, there is no way for the system to satisfy everyone, and so it is fundamentally flawed.

The last area examined is predicting social outcomes, where ML systems are being developed to make predictions around areas such as criminal recidivism, terrorism, even at-risk children for social intervention. This area is fraught with issues (Minority Report, anyone?), and indeed the results of the majority of these studies have been less than spectacular. Yet interest and investment continue, and as the researcher noted, “we seem to have decided to suspend common sense when AI is involved.”

These are just two of the areas examined by this fascinating site, undoubtedly one of the most accessible explorations of technology we’ve come across. And yet the takeaway is still one where common sense must prevail. If you’re hearing a pitch about an AI system that can determine your marketing strategy, develop your creative, or plan your media, stop and think. Just because you can use AI doesn’t mean you should. As AI Myths notes, machine learning cannot replace human nuance.

AI is a tool, and like any tool, it’s only as good as the way it’s used.

For more debunked myths about AI, visit: https://www.aimyths.org/. And for an in-depth conversation about the epistemology of AI, email caspar.y@affinity.ad